
QUICK SUMMARY:

A/B testing is a must-have strategy for agencies aiming to optimize campaign performance with data-backed decisions. In this guide, Lindsay Casey, AgencyAnalytics’ Paid Campaign Manager, reveals her proven process for Google Ads A/B testing and shares expert tips to drive success.

Your agency thrives on delivering measurable results, and in the world of Google Ads, every click counts. A/B testing—also known as split testing—is your secret weapon for removing guesswork to ensure that your Google Ads campaigns are driven by data, not assumptions.

In this guide, I’ll share everything you need to know to master Google Ads A/B testing, from selecting which variables to test to my advice for setting up effective tests for client campaigns. Let’s dive in!

What Is A/B Testing?

In the world of Google Ads, A/B testing involves presenting two versions of an ad to your client’s audience, splitting traffic between versions, and then comparing results to determine which ad performs better.

By running these controlled experiments, you gain a deeper understanding of what resonates with your client’s audience, whether it’s a particular headline, ad copy, visual element, or Call-to-Action. With A/B testing, every campaign decision is rooted in evidence, empowering you to drive measurable results and get the most value from your client’s ad spend.

How Valuable is A/B Testing for Client Campaigns?

Let’s break down why A/B testing is one of the smartest moves you can make when running Google Ads campaigns for your clients:

Boost Conversions: Testing variables like ad headlines, messaging, and Call-to-Action buttons reveals which aspects of your client’s offering capture the audience's attention and drive more clicks. This data is invaluable for optimizing Google Ads campaigns to improve critical performance metrics like Click-Through Rate (CTR) and Conversion Rate.

Enhance Personalization: Insights from a split test help you uncover audience preferences, enabling deeper segmentation and more targeted messaging.

Maximize Ad Spend Efficiency: Running experiments on a controlled share of traffic limits the budget spent on untested assumptions. You’ll know what works and what doesn’t before scaling up campaigns.

Drive ROI: Small tweaks sometimes lead to big wins! Incremental improvements from testing compound over time, delivering a powerful boost to your Return On Ad Spend (ROAS).

Eliminate Guesswork: Data-driven decisions replace hunches, giving you confidence in your strategy.
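The “compounding” point above is easy to check with a little arithmetic: sequential relative lifts multiply rather than add. The sketch below assumes each winning test improves the same metric (say, conversion rate) by a fixed percentage on top of the previous winner; the 5% figure is hypothetical, not a result from this guide.

```python
def compounded_lift(lifts: list[float]) -> float:
    """Return the total relative lift after applying each lift in sequence.

    Each entry is a relative improvement on the previous baseline,
    e.g. 0.05 means +5%.
    """
    total = 1.0
    for lift in lifts:
        total *= 1.0 + lift  # lifts multiply; they do not simply add
    return total - 1.0


if __name__ == "__main__":
    # Hypothetical: five winning tests, each improving conversion rate by 5%.
    five_wins = [0.05] * 5
    print(f"Combined lift: {compounded_lift(five_wins):.1%}")
```

Five hypothetical 5% wins combine to roughly 27.6% rather than 25%, because each lift applies to an already-improved baseline. Real lifts from separate tests rarely stack this cleanly, so treat this as an idealized illustration.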


What Variables Should You A/B Test in Google Ads Campaigns?

The success of your Google Ads campaigns hinges on testing the right elements. Let’s explore the top variables to test in your ad campaigns:

Ad Headlines

Your headline is the first thing your client’s audience sees and is one of the best places to test ad variations. By presenting two headline variations, you uncover which approach resonates more, whether it’s emphasizing benefits, urgency, or a specific Call-to-Action (CTA).

For example, let’s say you’re targeting users with informational search intent. One version of your headline might focus on providing knowledge. The other could emphasize a comparison angle. Split testing these variations reveals which tone or value proposition captures the audience’s attention more effectively.

| Headline A | Headline B |
|---|---|
| Learn About the Top Tools for Bookkeeping Success. | Explore Bookkeeping Software That Saves Time. |

Weave in industry-specific language for the best results. For example, at AgencyAnalytics, we cater to an audience of marketing agencies. Some of the language we use includes “Win Back Billable Hours” and “Create Client Reports in Minutes.”

Ad Copy

Experiment with the tone and focus of your ad copy to uncover what resonates most with your audience. Including relevant keywords in the copy can improve ad relevance, one of the components of Quality Score, and shows searchers that your ad matches what they searched for.

Focus on addressing your audience’s pain points and offering solutions to encourage clicks. For instance, test whether users respond better to benefit-driven copy highlighting ease or savings versus feature-focused copy emphasizing specific functionalities or results.

| Copy A (Benefit-Driven) | Copy B (Feature-Focused) |
|---|---|
| Save Hours Every Week With Automated Reporting | Automate Reporting With Real-Time Data Sync |

Testing these two angles shows whether your audience responds more to outcomes or to capabilities, so you can lead with the message that best matches user intent and drives engagement.

Calls to Action (CTAs)

The CTA is one of the most critical elements of an ad. Even small changes, like tweaking the wording or button design, can significantly influence user behavior.

For example, let’s say you’re promoting a subscription service and want to test which CTA resonates more with users. One version might focus on urgency. The other might emphasize value.

| CTA #1 (Urgency) | CTA #2 (Value) |
|---|---|
| Start Your Free Trial Now! | Get 20% Off—Sign Up Today! |

Running this A/B test helps you determine whether users respond more to urgency around a no-risk free trial or to the appeal of an immediate discount.

Audience Targeting

A/B testing audience segments helps unlock invaluable insights into who engages most with your ads. By experimenting with different targeting approaches, you’ll pinpoint the groups that deliver the best results and refine your strategy for maximum impact.

For example, imagine you’re promoting an eco-friendly product. One test could compare two audiences:

| Audience #1 (Demographics) | Audience #2 (Behavioral Segments) |
|---|---|
| Target users aged 25–34 with an interest in sustainable living. | Focus on frequent online shoppers who have recently purchased eco-friendly products. |

By testing these segments, you’ll uncover whether lifestyle interests or shopping behavior drives more clicks and conversions. Analyzing these tests allows you to fine-tune your campaigns, ensuring ad spend goes toward the most engaged audiences.

Bid Strategies

Testing different bid strategies is another way to optimize Google Ads campaigns for cost efficiency and performance. Comparing approaches, such as manual vs. automated bidding, shows you which strategy delivers the best results for a specific campaign goal.

Here are some different bidding strategies to test for Google Ads:

| Bidding Strategy | Use Cases |
|---|---|
| Manual Bidding | Gives you full control over individual bids, making it ideal for campaigns where you want to closely manage cost-per-click (CPC) and adjust based on real-time performance. |
| Automated Bidding | Leverages Google’s AI to optimize bids for your desired outcomes, such as maximizing conversions or impression share. |
| Maximize Clicks | This strategy is often effective for bottom-of-funnel campaigns targeting tight, high-converting audiences, where getting as much traffic as possible is critical. |
| Target ROAS | Ideal for campaigns focused on profitability, this strategy adjusts bids to maximize conversion value while aiming for a specific ROAS target. |
| Target Impression Share | Use this to dominate visibility for branded campaigns or ensure a high share of voice in competitive spaces. |

When testing bid strategies, run controlled experiments to compare how each approach impacts metrics like CPC, conversion rate, and overall ROI. These insights will help you balance cost efficiency with campaign goals, ensuring you’re allocating budgets where they’ll deliver the highest returns.
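To compare bid strategies on these metrics, it helps to compute them the same way for each experiment arm. The sketch below derives CPC, conversion rate (conversions per click), cost per conversion, and ROAS (conversion value divided by cost) from arm totals you might export from a Google Ads experiment. The `ArmTotals` structure and all numbers are hypothetical.

```python
from dataclasses import dataclass


def _ratio(numerator: float, denominator: float) -> float:
    """Divide, returning NaN instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


@dataclass
class ArmTotals:
    """Totals for one experiment arm, e.g. one bid strategy (hypothetical structure)."""
    name: str
    clicks: int
    cost: float              # total ad spend, account currency
    conversions: float       # may be fractional under some attribution models
    conversion_value: float  # total conversion value, account currency

    @property
    def cpc(self) -> float:
        return _ratio(self.cost, self.clicks)

    @property
    def conversion_rate(self) -> float:
        return _ratio(self.conversions, self.clicks)

    @property
    def cost_per_conversion(self) -> float:
        return _ratio(self.cost, self.conversions)

    @property
    def roas(self) -> float:
        # ROAS = conversion value / ad spend
        return _ratio(self.conversion_value, self.cost)


if __name__ == "__main__":
    # Hypothetical totals for a manual-bidding control and a Target ROAS trial arm.
    arms = [
        ArmTotals("Manual CPC", clicks=1000, cost=1500.0, conversions=40, conversion_value=6000.0),
        ArmTotals("Target ROAS", clicks=800, cost=1400.0, conversions=42, conversion_value=7000.0),
    ]
    for arm in arms:
        print(f"{arm.name:12} CPC={arm.cpc:.2f} CVR={arm.conversion_rate:.2%} "
              f"CPA={arm.cost_per_conversion:.2f} ROAS={arm.roas:.2f}")
```

In this made-up example, the Target ROAS arm pays more per click but converts more often and returns more value per unit of spend. That is the kind of trade-off a single metric like CPC would hide.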

When I joined AgencyAnalytics, one of my first Google Ads experiments was a Target CPA test on our Social Media Analytics campaign. Previously, no Target CPA had been set, so we split the traffic:

- 50% of traffic continued with the existing strategy (no Target CPA).

- 50% of traffic was run with a Target CPA applied.

Over 30 days, the results spoke volumes:

- CTR increased by 20% on the test variant.

- Conversion rate skyrocketed by 123%.

Based on these results, we applied the Target CPA settings across the entire campaign, significantly improving its overall performance.
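Figures like “CTR increased by 20%” are relative lifts: the change divided by the control’s value. Relative and absolute (percentage-point) changes are easy to confuse when reporting to clients, so here is a minimal sketch of both. The rates in the example are hypothetical, chosen only to show what +20% and +123% relative lifts look like. They are not the campaign’s actual numbers.

```python
def relative_lift(control: float, variant: float) -> float:
    """Relative change of the variant versus the control (0.20 means +20%)."""
    if control == 0:
        raise ValueError("control value must be non-zero for a relative lift")
    return (variant - control) / control


def absolute_change_pp(control: float, variant: float) -> float:
    """Absolute change in percentage points, for rates expressed as fractions."""
    return (variant - control) * 100


if __name__ == "__main__":
    # Hypothetical rates, not the article's underlying data.
    ctr_control, ctr_variant = 0.050, 0.060
    cvr_control, cvr_variant = 0.020, 0.0446
    print(f"CTR: {relative_lift(ctr_control, ctr_variant):+.0%} relative, "
          f"{absolute_change_pp(ctr_control, ctr_variant):+.1f} pp absolute")
    print(f"CVR: {relative_lift(cvr_control, cvr_variant):+.0%} relative, "
          f"{absolute_change_pp(cvr_control, cvr_variant):+.2f} pp absolute")
```

A +123% relative lift on a 2% conversion rate is a 2.46-point absolute gain. Stating which one you mean keeps client expectations accurate.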

Visuals

While Google Search Ads rely on text, Google Display Ads give you the option to test different images, videos, and layouts to determine which captures the most attention.

For example, if you’re promoting a fitness app on behalf of a client, one version of your ad could feature a static image of a person using the app with the headline: “Track Your Progress Anytime.” The other version could use a short video showing real-time app features, ending with the same headline. By testing static images against video creatives, you uncover whether your audience responds better to dynamic video or to clear, focused still imagery.

Beyond format, you should also test variations in color schemes, imagery style, or layout to see what grabs attention and keeps users engaged. Each experiment brings you closer to understanding how to optimize your ad visuals for maximum impact.

Landing Pages

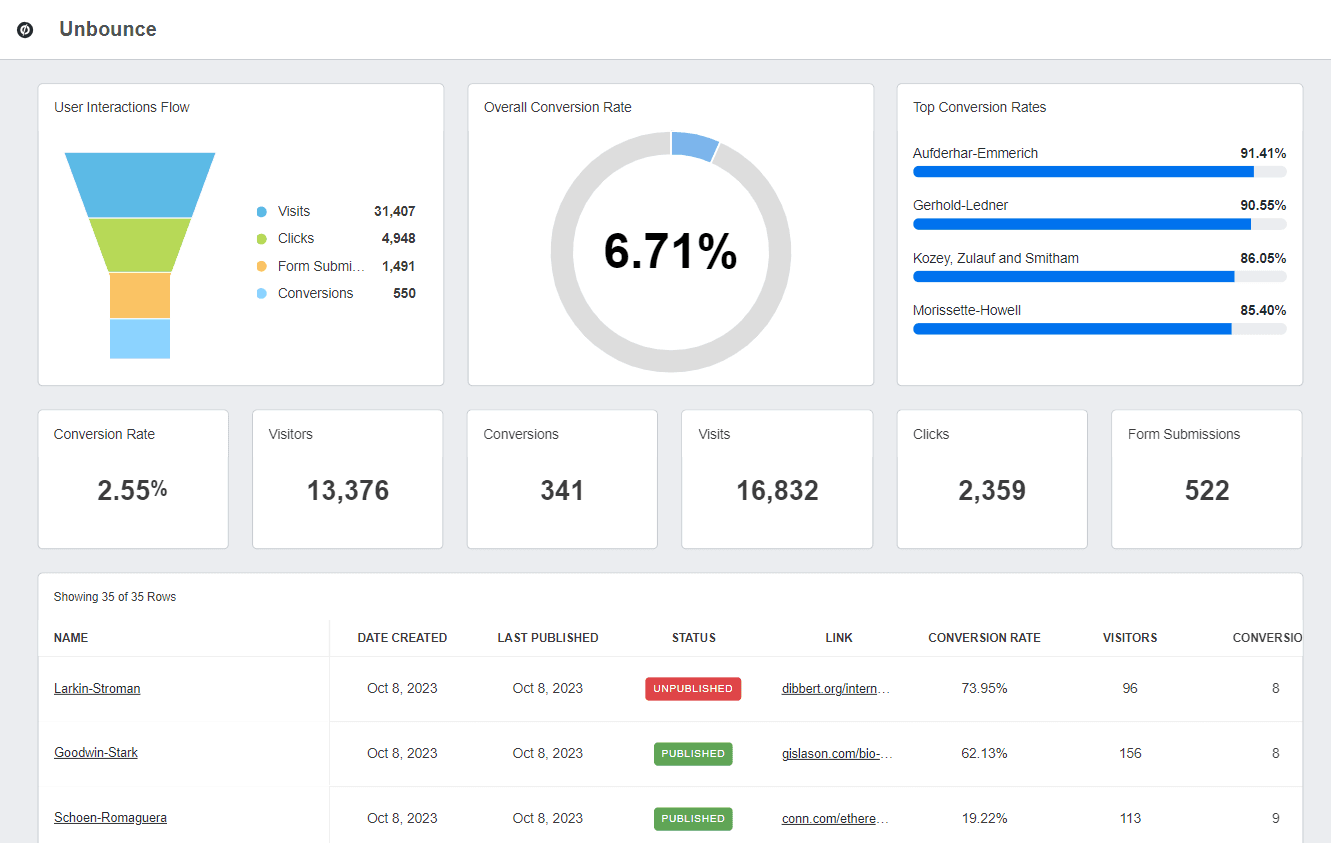

The post-click experience is just as important as the ad itself, and a well-optimized landing page can significantly boost conversion rates. As a next step, A/B test the copy, messaging, and CTA buttons on the landing pages themselves, just as you do with your Google Ads.

Don’t stop at the wording—visual elements matter too. Test how different colors, popular fonts, and button styles impact the visibility and effectiveness of your CTA. The “squint test” is a great tool—if you squint at your landing page and the CTA still pops out, it’s doing its job effectively!

One test we recently ran at AgencyAnalytics involved an exit-intent popup. We sent traffic to the same landing page but showed the popup to 50% of visitors. The traffic that saw the popup converted 11% less than the traffic that did not, so we did not apply the change to the base campaign.
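Ad-side experiments are split by Google Ads itself, but a landing page test like this popup experiment needs its own assignment logic on the site. One common approach is deterministic hash-based bucketing. The sketch below assumes each visitor carries a stable ID, such as a first-party cookie value. The experiment name `exit_popup_test` is hypothetical, and the sketch does not describe how AgencyAnalytics actually ran its test.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically assign a visitor to "control" or "variant".

    Hashing the experiment name together with the visitor ID keeps each
    visitor in the same group on every page load, while different
    experiments get independent splits.
    """
    if not 0.0 <= variant_share <= 1.0:
        raise ValueError("variant_share must be between 0 and 1")
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).digest()
    # Keep 53 bits so the division is exact and the bucket falls in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return "variant" if bucket < variant_share else "control"


if __name__ == "__main__":
    groups = [assign_variant(f"visitor-{i}", "exit_popup_test") for i in range(10_000)]
    print("Share shown the popup:", groups.count("variant") / len(groups))
```

Because assignment depends only on the IDs, a returning visitor always sees the same version. This keeps the two groups from contaminating each other.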

Connect the AgencyAnalytics Unbounce integration in seconds to monitor and report on your landing page leads, conversions, and more. Get started with your free 14-day AgencyAnalytics trial.

Best Practices for Google Ads A/B Testing

A/B testing is only as effective as the strategy behind it. To turn your experiments into meaningful results, you need a clear plan that eliminates guesswork and delivers actionable insights. Here’s how to make every test count:

1. Define Your Goal

Don’t go into testing without a target. Decide what you want to achieve, whether it’s boosting Click-Through Rate (CTR), driving more conversions, or reducing Cost-Per-Click (CPC). Your goal shapes the entire test and serves as your north star when deciding which variable is worth testing.

2. Choose One Variable To Test

To get clear results, focus on testing one variable at a time. Maybe it’s the ad headline, CTA, or even the display URL. Testing multiple elements simultaneously will muddy your results, leaving you unsure of what drove the change.

3. Formulate a Hypothesis

Next, put your testing goal into a clear statement. A strong hypothesis predicts the outcome of your test. For example: “If we use a benefit-focused headline, our CTR will increase by 10%.”

This gives you a benchmark to measure success and ensures you’re testing purposefully, not just out of curiosity.

4. Set Up the Test Through Your Google Ads Account

Use Google’s built-in tools, like Campaign Experiments or Ad Variations, to streamline the process. Be sure to:

- Split traffic evenly between variations.

- Keep the test environment as controlled as possible.

This ensures your results are reliable and not skewed by uneven exposure.
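One way to confirm that traffic really was split evenly is a sample ratio mismatch (SRM) check: a chi-square goodness-of-fit test comparing the observed counts in each arm with the intended split. The sketch assumes you have the number of assigned units per arm, such as visitors in a landing page test. If the change itself affects volume (for example, a bid strategy changing impression counts), an imbalance in impressions may reflect the treatment rather than a broken split. The 0.001 alert threshold is a common convention, not a Google Ads setting.

```python
import math


def srm_p_value(control_count: int, variant_count: int,
                expected_variant_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-arm traffic split.

    A very small p-value means the observed split is unlikely under the
    intended ratio, which usually points to an assignment or tracking problem.
    """
    total = control_count + variant_count
    if total == 0 or not 0.0 < expected_variant_share < 1.0:
        raise ValueError("need traffic and a share strictly between 0 and 1")
    expected_variant = total * expected_variant_share
    expected_control = total - expected_variant
    chi2 = ((variant_count - expected_variant) ** 2 / expected_variant
            + (control_count - expected_control) ** 2 / expected_control)
    # With one degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Hypothetical visitor counts for a planned 50/50 split.
    for control, variant in [(10_000, 10_100), (10_000, 10_600)]:
        p = srm_p_value(control, variant)
        flag = "possible SRM" if p < 0.001 else "split looks fine"
        print(f"{control} vs {variant}: p = {p:.4g} ({flag})")
```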

5. Run the Test for a Sufficient Duration

Testing takes time. Depending on your traffic volume, you might need 2–4 weeks to collect enough data for a statistically significant result. The larger your sample size, the more reliable your results. Resist the urge to stop the test early: premature conclusions often lead to wasted ad spend.
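To turn “2–4 weeks” into a number for a specific account, you can estimate the sample size each variant needs before launching. The sketch below uses the standard normal-approximation formula for comparing two proportions with a two-sided test. The 5% baseline CTR, 10% minimum detectable lift, and 3,000 daily impressions are hypothetical inputs. A 0.05 significance level and 80% power are common defaults, not requirements.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Units needed per variant to detect a relative lift in a rate.

    baseline_rate: current rate in the control, e.g. 0.05 for a 5% CTR.
    relative_mde: smallest relative lift worth detecting, e.g. 0.10 for +10%.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be in (0, 1) and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(per_variant: int, daily_units: int, variants: int = 2) -> int:
    """Days to reach the sample size when daily_units are split across variants."""
    return math.ceil(per_variant * variants / daily_units)


if __name__ == "__main__":
    n = sample_size_per_variant(baseline_rate=0.05, relative_mde=0.10)
    print(f"Impressions per variant: {n:,}")
    print(f"Days at 3,000 impressions/day: {days_needed(n, 3_000)}")
```

With these inputs, the estimate lands around 31,000 impressions per variant, or about three weeks of traffic. Halving the detectable lift roughly quadruples the required sample, which is why subtle changes need longer tests.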

6. Analyze Results

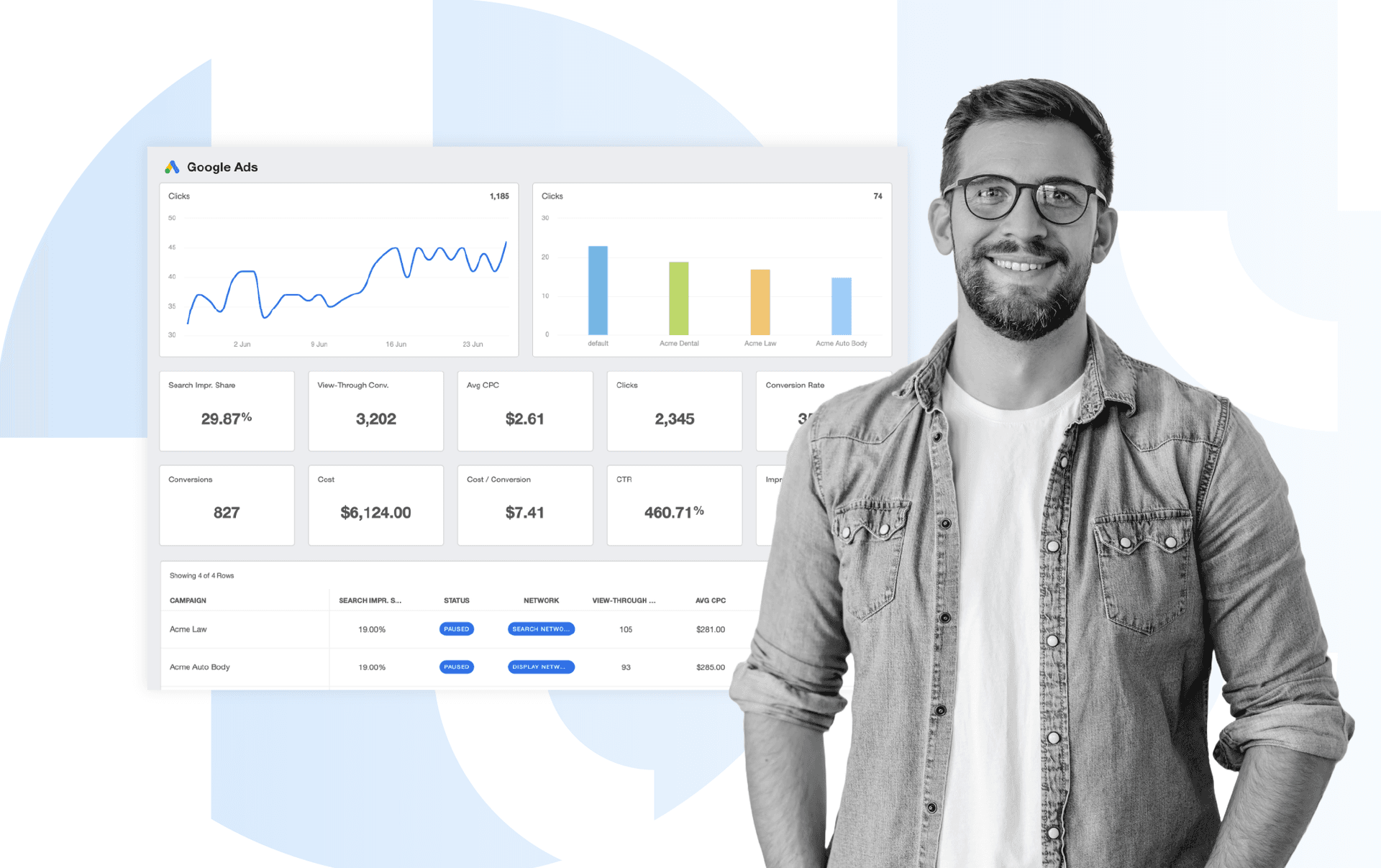

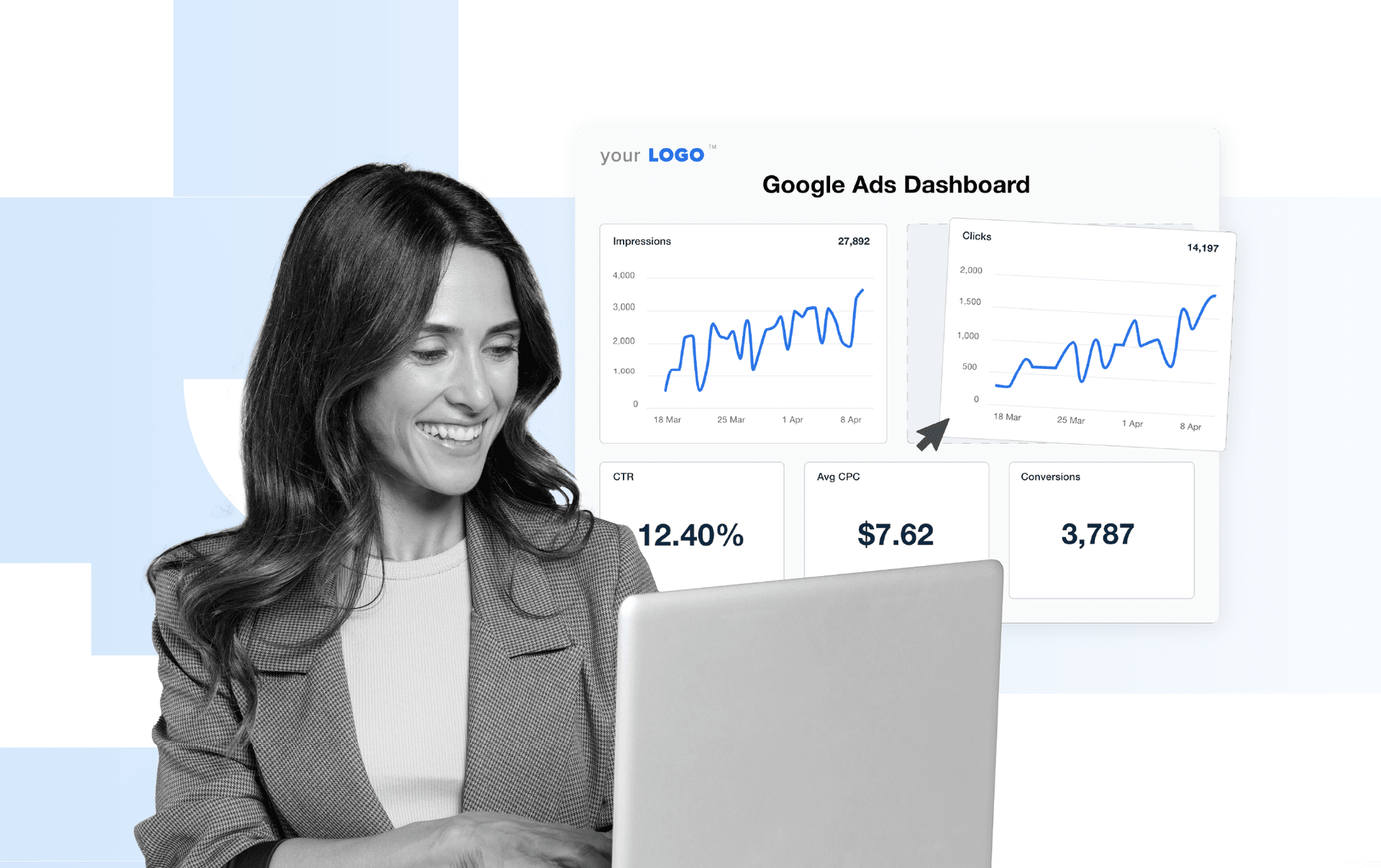

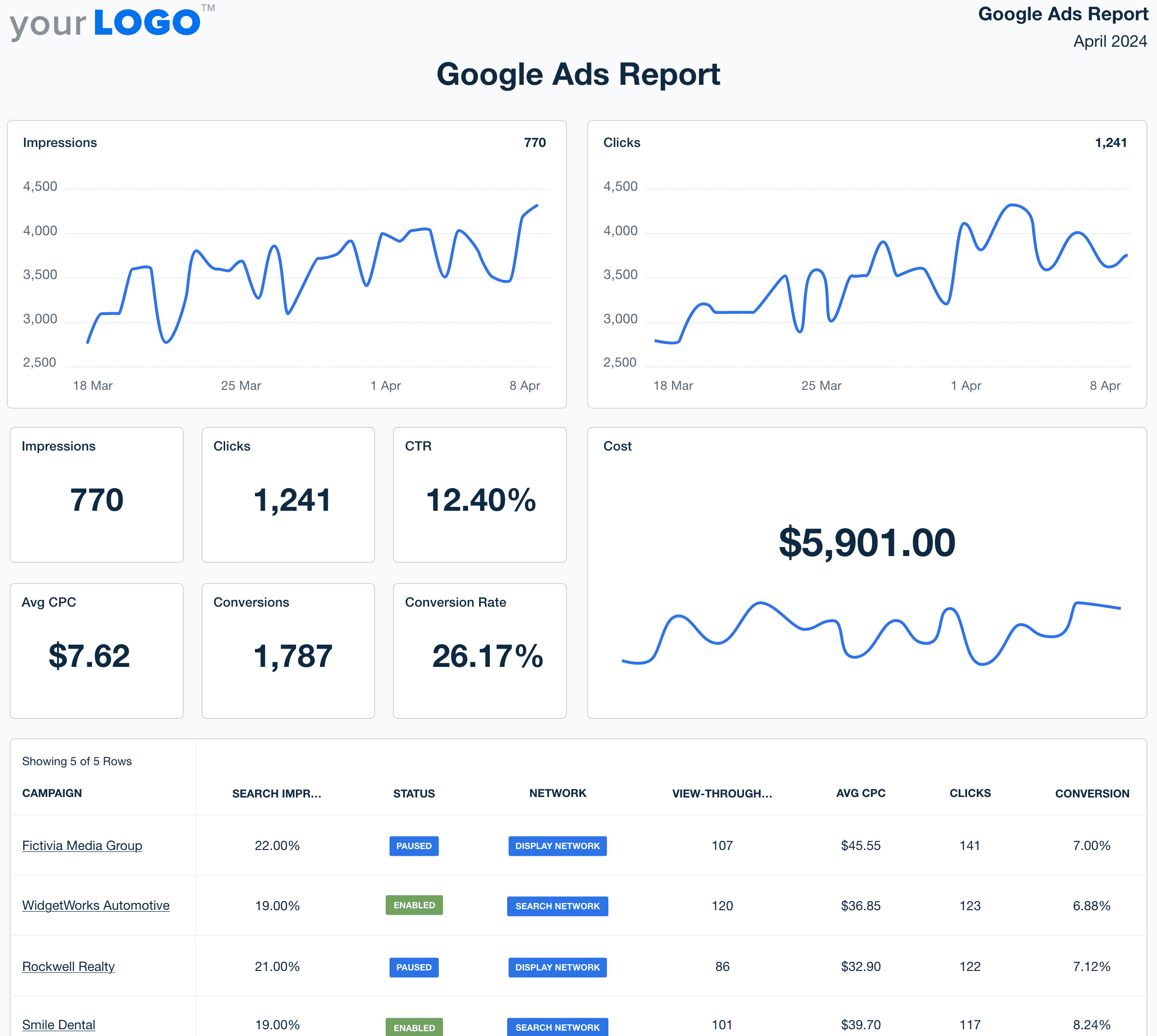

Tracking key metrics like click-through rates (CTR), conversion rates, and cost-per-conversion is essential to understanding A/B test results. Tools like customizable dashboards make consolidating this data and gaining actionable insights easy. For example, AgencyAnalytics offers a pre-built Google Ads report template designed to simplify this process. Consider tools that consolidate your Google Ads data alongside metrics from other campaigns to get a comprehensive view of your performance. This broader perspective reveals cross-channel insights and helps you refine your strategy.
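Before calling a winner, it helps to check whether the difference between variants is larger than random noise. Below is a minimal sketch of a two-proportion z-test that works for CTR (clicks over impressions) or conversion rate (conversions over clicks). The counts are hypothetical, and Google Ads’ own experiment reporting may use a different statistical method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates.

    A is the control and B the variant; "successes" are clicks or
    conversions and "trials" are impressions or clicks.
    """
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        raise ValueError("pooled rate is 0% or 100%; there is no variation to test")
    z = (p_b - p_a) / se
    p_value = 2 * NormalDist().cdf(-abs(z))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical CTR test: 500 vs 600 clicks on 10,000 impressions each.
    z, p = two_proportion_z_test(500, 10_000, 600, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```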

Use the pre-built Google Ads Dashboard template to present your client’s data in an easy-to-understand, visually appealing format. Try it for yourself with the AgencyAnalytics 14-day free trial.

In cases where your test results are inconclusive, AgencyAnalytics also helps you dig deeper. Layer in insights from other channels to determine if external factors influenced performance, or pivot to test more significant changes with the confidence that your tracking is accurate and comprehensive.
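One practical way to spot an inconclusive result is to look at a confidence interval for the difference between variants. If the interval still includes zero, the data cannot yet tell you which version is better. The sketch below uses the simple Wald interval, a normal approximation that is less reliable with very small counts. The conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(successes_a: int, trials_a: int,
                             successes_b: int, trials_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for rate_b - rate_a (variant minus control)."""
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    se = math.sqrt(p_a * (1 - p_a) / trials_a + p_b * (1 - p_b) / trials_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def is_inconclusive(interval: tuple[float, float]) -> bool:
    """True when the interval spans zero, i.e. no clear winner yet."""
    low, high = interval
    return low <= 0.0 <= high


if __name__ == "__main__":
    # Hypothetical conversions out of clicks for control (A) and variant (B).
    for label, counts in {"small test": (40, 1_000, 46, 1_000),
                          "larger test": (500, 10_000, 600, 10_000)}.items():
        low, high = diff_confidence_interval(*counts)
        verdict = "inconclusive" if is_inconclusive((low, high)) else "clear difference"
        print(f"{label}: [{low:+.4f}, {high:+.4f}] -> {verdict}")
```

An inconclusive interval is a signal to keep the test running, test a bolder change, or check external factors. It does not mean the two versions perform the same.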

7. Implement and Iterate

Found a winner? Great—now apply the insights to your campaign. But remember, A/B testing is a process, not a one-time event. Keep experimenting to optimize performance. If your hypothesis didn’t pan out, adjust your approach and test again.

8. Document Findings

Keep a record of every test—your hypotheses, results, and insights. Over time, this becomes a treasure trove of knowledge, helping to avoid duplicate efforts and inform future strategies. A simple spreadsheet works wonders for organizing these details.
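If a script maintains the spreadsheet or exports to it, a fixed set of columns keeps entries comparable across tests. The sketch below writes a CSV test log with Python’s standard `csv` module. The column names and the `AbTestRecord` type are hypothetical. The two rows summarize the Target CPA and exit-intent popup tests described earlier in this guide and leave blank any detail the write-up does not state.

```python
import csv
import sys
from dataclasses import asdict, dataclass, fields
from typing import Iterable, TextIO


@dataclass
class AbTestRecord:
    """One row in the A/B test log (hypothetical column set)."""
    test_name: str
    variable: str
    hypothesis: str
    metrics_reported: str
    duration_days: str
    result: str
    decision: str


def write_test_log(out: TextIO, records: Iterable[AbTestRecord]) -> None:
    """Write a header row followed by one CSV row per test."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(AbTestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


if __name__ == "__main__":
    records = [
        AbTestRecord(
            test_name="Social Media Analytics: Target CPA",
            variable="Bid strategy (Target CPA vs. none)",
            hypothesis="",  # not stated in the write-up
            metrics_reported="CTR, conversion rate",
            duration_days="30",
            result="CTR +20%, conversion rate +123% on the Target CPA arm",
            decision="Applied Target CPA to the whole campaign",
        ),
        AbTestRecord(
            test_name="Landing page: exit-intent popup",
            variable="Exit-intent popup shown vs. not shown",
            hypothesis="",  # not stated in the write-up
            metrics_reported="Conversions",
            duration_days="",  # not stated in the write-up
            result="Popup arm converted 11% less",
            decision="Not applied",
        ),
    ]
    write_test_log(sys.stdout, records)
```

To save the log instead of printing it, pass a file opened with `open("ab_test_log.csv", "w", newline="")`. The `newline=""` argument is what the `csv` module documentation recommends for file objects.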

By sticking to this structured approach, you’ll maximize the effectiveness of your A/B tests and uncover strategies that truly move the needle. Start small, stay focused, and let the data guide your next big win.

The Role of Critical Thinking in Google Ads A/B Testing

A/B testing isn’t just about running experiments—it’s about interpreting the results and using those insights to drive smarter decisions. Critical thinking is your superpower here, allowing you to connect the dots, question assumptions, and refine your strategy.

While tools like automated bidding and campaign experiments simplify the process, they don’t always align perfectly with your client’s unique goals. That’s where critical thinking bridges the gap between data and actionable insights.

Here’s why this skill is so important:

Objective Decision-Making: When test results defy expectations, critical thinking helps you dig into the “why.” It’s not about being right—it’s about understanding what’s best for the campaign. Did external factors, like seasonality or competitor activity, play a role? Ask yourself: Does the data tell the full story, or do I need to investigate further?

Balancing Automation and Judgment: Google’s AI tools are powerful but not infallible. Critical thinking allows you to assess when to let automation run its course and when to step in. For example, are automated bidding strategies prioritizing clicks but not delivering quality leads? Use your judgment to adjust accordingly.

Understanding the Audience: Remember, neither you nor your client is the end user. Critical thinking helps you evaluate results from the target audience's perspective, focusing on what truly resonates with them. Ask: Are we meeting their needs and expectations, or just assuming we are?

Tips for Applying Critical Thinking to A/B Testing:

Here are a few ways to make sure you’re practicing critical thinking when running Google Ads campaigns:

Dig Into the Data: Don’t stop at surface-level metrics like CTR or impressions. Look deeper into conversion paths, engagement rates, and time-on-site to get the full picture.

Ask the Right Questions: Go beyond the numbers with questions like: "What might have influenced these results outside the test?", "Is this outcome aligned with the campaign’s primary goal?", and "How do these findings compare to previous tests or benchmarks?"

Evaluate External Factors: Consider variables like changes in consumer behavior, market trends, or platform updates that could skew results.

Challenge Your Assumptions: Just because a strategy has worked before doesn’t mean it always will. Be open to testing bold variations or revisiting underperforming ideas with a fresh perspective.

Test Strategically: Use insights from previous tests to inform your next steps. For instance, if an ad’s performance improved with a benefit-driven headline, test other benefit-focused variations to refine the winning approach further.

Data-Driven Campaigns Win Every Time

A/B testing is the backbone of successful Google Ads campaigns, helping you deliver better campaign results with less wasted spend. By testing variables like headlines, CTAs, and landing pages, you give your team accurate data on what resonates most with your client’s audience, so you can keep driving more conversions.

Written by

Lindsay Casey is the Paid Campaign Manager at AgencyAnalytics, specializing in optimizing digital ad performance and driving results. When she’s not diving into campaign data, you’ll find her exploring new trails, planning her next adventure, or getting lost in a great book.
