Blocked By Robots.txt

SEO Analysis

Identify and rectify unintentional blocks that might hinder SEO.

Content Audit

Ensure high-value content is accessible to search engines.

Visibility Check

Maintain optimal website visibility and prevent indexing issues.

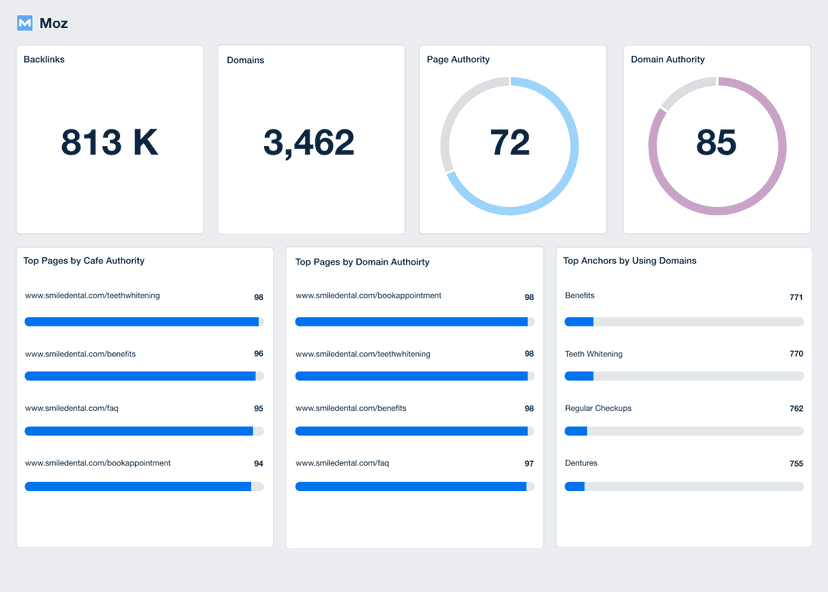

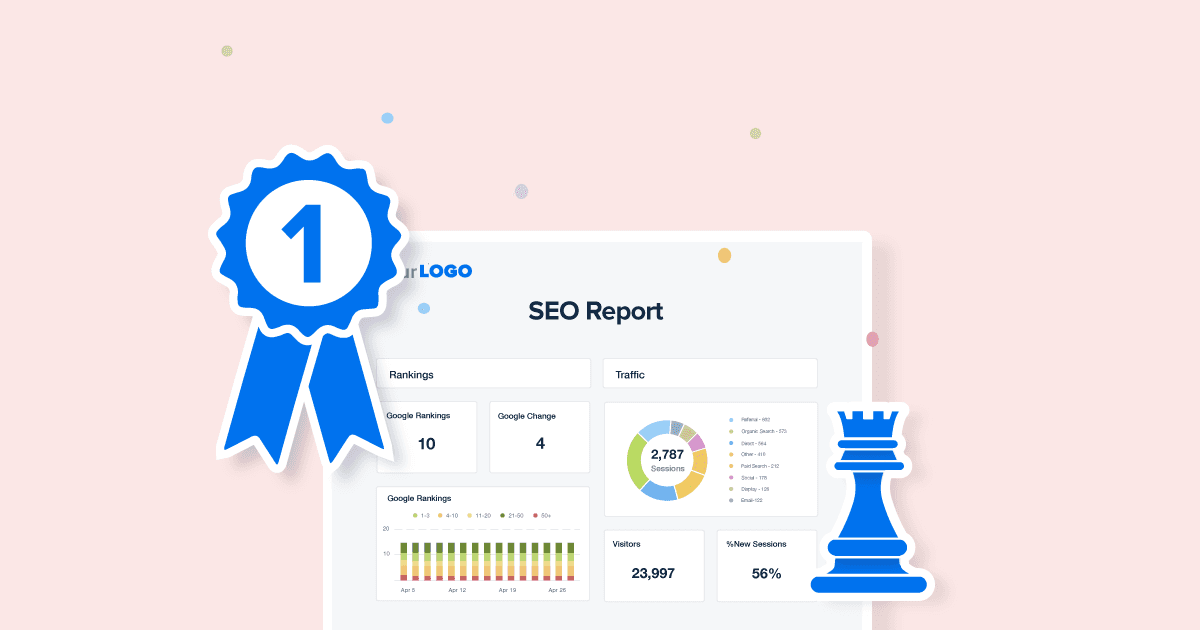

Client Reports

Highlight effective site management and SEO enhancements.

The Impact of Blocked by Robots.txt on SEO

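Blocked by Robots.txt serves as a key indicator of how accessible a website is to search engines. When essential pages are blocked by mistake, search engines cannot crawl their content, which can significantly impair the site's rankings and visibility. Used deliberately, however, robots.txt keeps crawlers away from irrelevant pages so they spend their time on the content that matters, which supports the site's overall SEO performance. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still be indexed if other pages link to it.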

Monitoring and managing this metric helps ensure that valuable content is discoverable and ranks appropriately in search results, which directly affects a website's traffic and user engagement.

This metric is vital for striking the right balance between making valuable content accessible to search engines and keeping low-value pages out of their crawl.

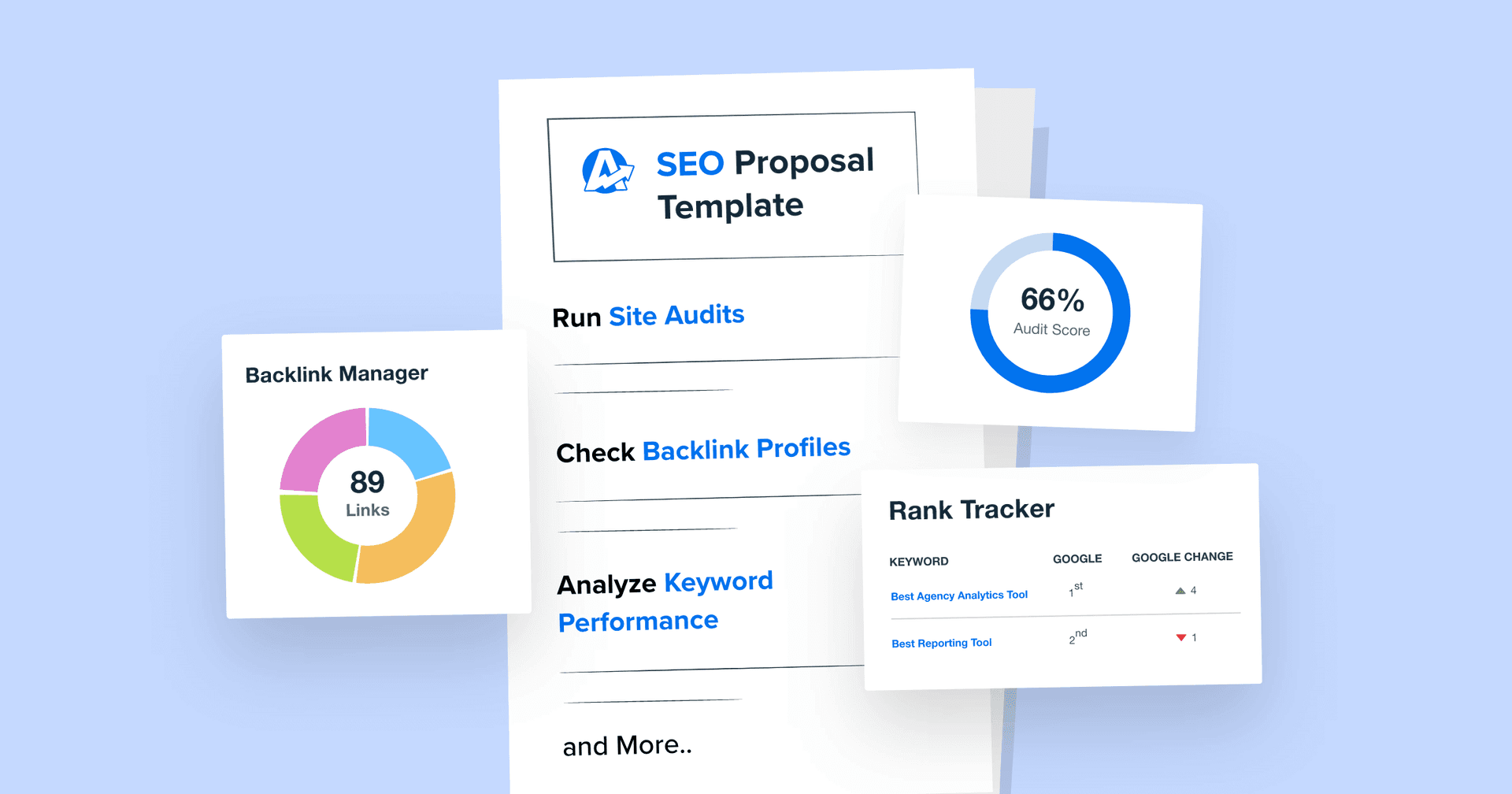

Stop Wasting Time on Manual Reports... Get SEO Insights Faster With AgencyAnalytics

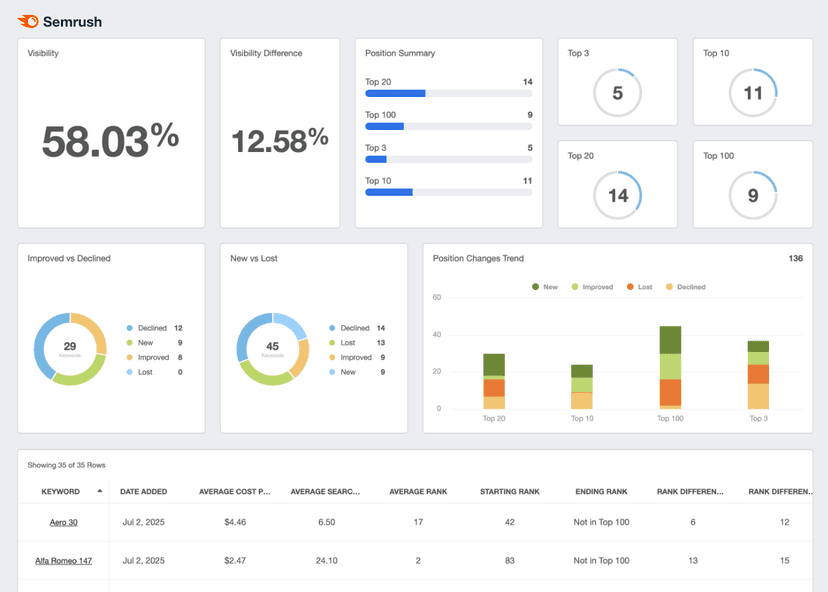

The Role of Blocked by Robots.txt Among Other KPIs

Blocked by Robots.txt is directly linked to a site's overall visibility and accessibility on search engines. For instance, when a page is blocked, search engines cannot crawl its content, so it usually drops out of search results or appears only as a bare URL without a description. That, in turn, affects metrics like Organic Traffic, Page Views, and Click-Through Rate (CTR).

On the other hand, well-managed blocking directives, informed by user-agent behavior and analytics, can increase relevant traffic and improve engagement metrics.

This metric also interacts with technical SEO KPIs such as Site Speed and Crawl Errors, because crawlable, optimized pages contribute to a healthier, more efficient site. Keeping important content unblocked improves individual page performance as well as the overall health of the website.

How To Edit a Robots.txt File

The process for editing a robots.txt file varies across content management platforms, such as WordPress, Wix, Shopify, and Adobe Commerce (formerly Magento).

For example, WordPress sites and WooCommerce stores serve a virtual robots.txt file by default, and core WordPress offers no dashboard editor for it. Many SEO plugins, however, provide one: navigate to the SEO plugin's settings and look for a file editor or similar option. This gives a user-friendly way to modify the robots.txt file without accessing server files directly, which makes it ideal for those managing a WordPress website.

As a hosted platform, Shopify generates a default robots.txt file, which can be viewed by appending /robots.txt to the store's URL. To add customized directives suited to the store's SEO strategy, merchants can edit the robots.txt.liquid template in the store's theme code.
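Because crawlers only look for robots.txt at the root of each host, the file that governs any page can be located from that page's URL. The minimal Python sketch below derives it; `robots_url` is a hypothetical helper and the store URL is a placeholder.

```python
from urllib.parse import urlsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    robots.txt lives at the root of each scheme + host (+ port), so the
    path, query string, and fragment of the page URL are discarded.
    """
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


if __name__ == "__main__":
    # Placeholder store URL, used only for illustration.
    print(robots_url("https://example-store.myshopify.com/products/blue-shirt?variant=1"))
```

Opening the returned URL in a browser shows the live file, which is a quick first check before editing anything.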

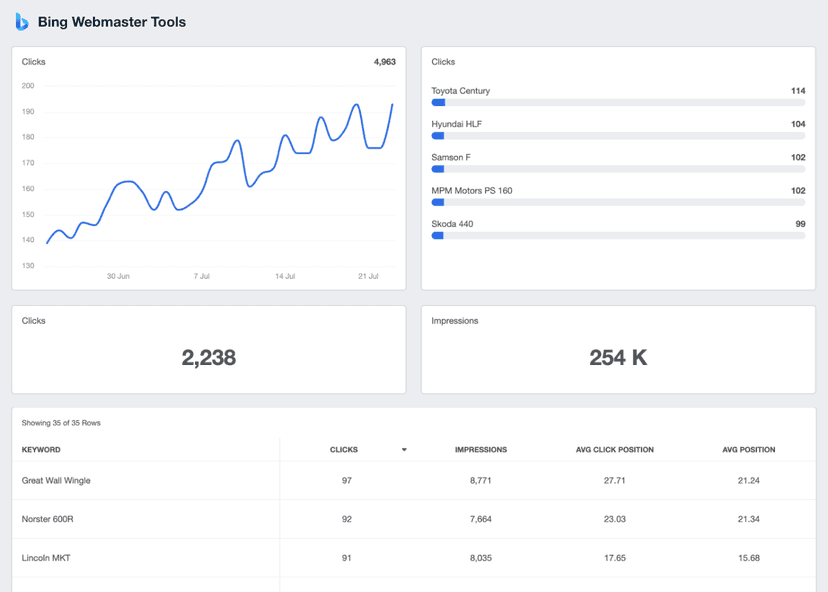

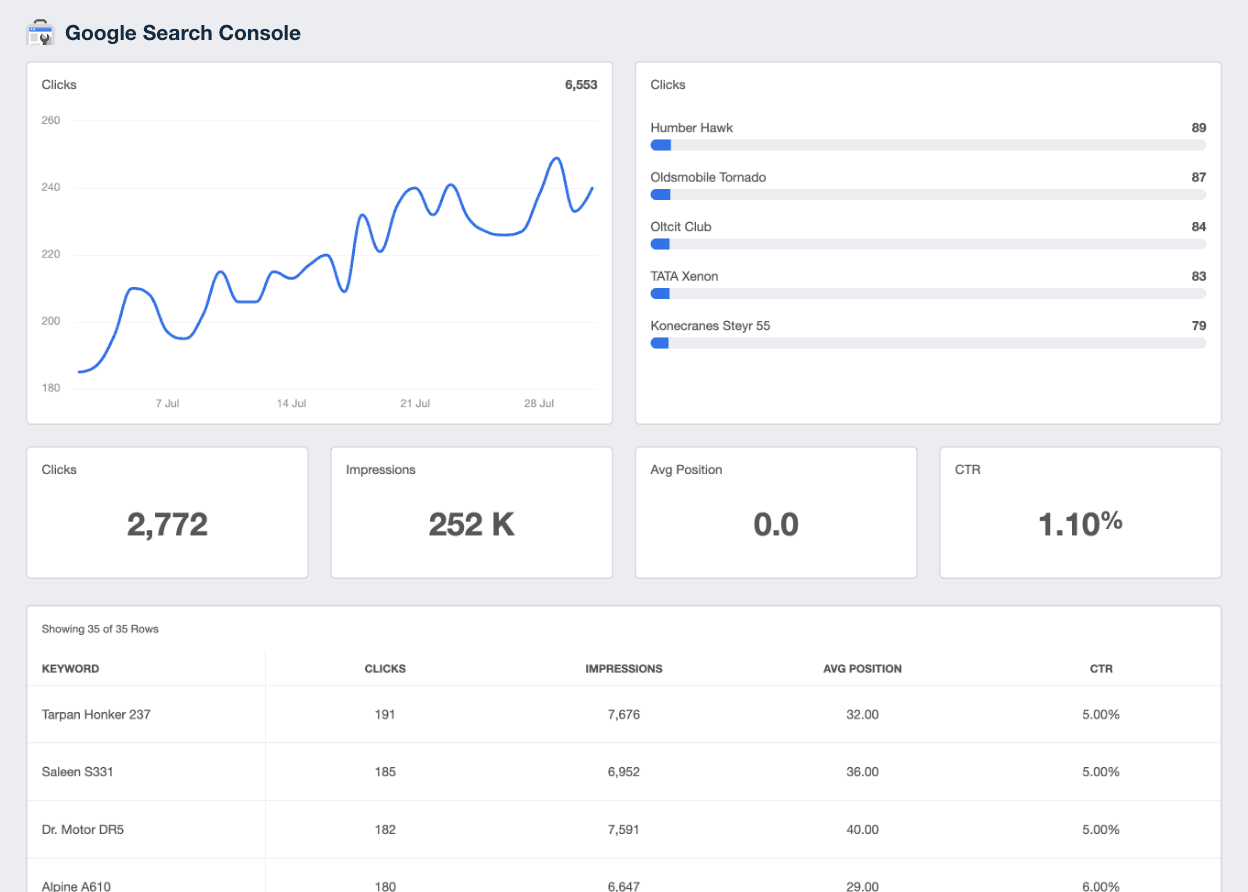

Calculating Blocked by Robots.txt Impact

Measuring Blocked by Robots.txt involves analyzing a website's robots.txt file and cross-referencing it with the site's content inventory to identify which pages are disallowed from crawling. Webmaster tools such as Google Search Console make this easier: its page indexing report lists the URLs that are blocked by robots.txt. Review each of these URLs to determine whether the block is intentional or a potential SEO issue, and audit the robots.txt file regularly to confirm that only the appropriate pages are blocked from crawling.
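The cross-referencing step can be sketched in Python with the standard library's `urllib.robotparser`. This is a minimal sketch under stated assumptions: the robots.txt text and URL inventory are hypothetical (the `Disallow: /blog/` rule is a deliberate mistake to show how an unintended block surfaces), and Python's parser applies simple first-match prefix rules rather than Google's wildcard and longest-match handling, so complex files may be evaluated differently than Googlebot would.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, paste in the live file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /blog/
"""

# Hypothetical content inventory, e.g. exported from a sitemap or CMS.
INVENTORY = [
    "https://example.com/",
    "https://example.com/blog/robots-txt-guide",
    "https://example.com/cart/checkout",
    "https://example.com/services/seo",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, INVENTORY):
        print("BLOCKED:", url)
```

Each flagged URL still needs a human decision: the cart block is intentional, while the blog block is the kind of SEO issue the audit is meant to catch.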

What Makes a Good Robots.txt File

A well-managed robots.txt file blocks only non-essential pages, such as admin areas, shopping carts, or internal search results. This keeps crawlers focused on important content while keeping low-value pages out of search engine crawls.
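As an illustration, here is a hypothetical robots.txt for a WordPress-style site, embedded in a short Python check that confirms key pages stay crawlable while low-value areas are blocked. The paths and URLs are assumptions, not recommendations for any specific site. The `Allow` line comes first because Python's `urllib.robotparser` applies the first matching rule, whereas Google applies the most specific match regardless of order.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block admin, cart, and internal search pages only.
GOOD_ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /cart/
Disallow: /search/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(GOOD_ROBOTS_TXT.splitlines())

# URL -> whether it should be crawlable (hypothetical expectations).
checks = {
    "https://example.com/services/seo": True,             # key page
    "https://example.com/blog/robots-txt-guide": True,    # key page
    "https://example.com/wp-admin/options.php": False,    # admin area
    "https://example.com/search/shoes": False,            # internal search
    "https://example.com/wp-admin/admin-ajax.php": True,  # explicitly re-allowed
}

for url, expected in checks.items():
    actual = parser.can_fetch("Googlebot", url)
    status = "OK " if actual == expected else "FIX"
    print(f"{status} crawlable={actual} {url}")
```

Keeping the expectations next to the rules makes it obvious when a new directive starts blocking a page that should rank.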

What Prompts a Robots.txt File Audit

A high number of important pages blocked by mistake signals poor robots.txt management. It reduces visibility and can cost organic traffic, directly hurting a site's SEO performance.

How To Set Blocked by Robots.txt Benchmarks and Goals

Setting benchmarks starts with understanding the site's structure and content strategy. Marketers need to establish which pages are critical for SEO and ensure these pages are not blocked from search engine crawlers. Historical data from Google Search Console and Google Analytics shows which pages have driven traffic and conversions, informing decisions about what should remain accessible to search engines. Changes to the robots.txt file should be strategic decisions based on this data, aimed at optimizing the site's overall performance and search presence.
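One way to turn historical data into a benchmark is to flag any page above a traffic threshold that robots.txt currently blocks; a healthy target for that list is zero. The Python sketch below assumes a hypothetical export of organic clicks per URL and a hypothetical `MIN_CLICKS` threshold; the numbers are placeholders, not real data, and `urllib.robotparser` only approximates Google's matching rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with an accidental /products/ block.
ROBOTS_TXT = """\
User-agent: *
Disallow: /products/
Disallow: /cart/
"""

# Hypothetical export: URL -> organic clicks in the comparison period.
PAGE_CLICKS = {
    "https://example.com/products/blue-shirt": 420,
    "https://example.com/cart/": 3,
    "https://example.com/blog/sizing-guide": 150,
}

MIN_CLICKS = 50  # hypothetical threshold for a "critical" page


def critical_pages_blocked(robots_txt, page_clicks, min_clicks, user_agent="Googlebot"):
    """Return (url, clicks) pairs for high-traffic pages that robots_txt blocks."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    flagged = [
        (url, clicks)
        for url, clicks in page_clicks.items()
        if clicks >= min_clicks and not parser.can_fetch(user_agent, url)
    ]
    # Highest-traffic problems first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for url, clicks in critical_pages_blocked(ROBOTS_TXT, PAGE_CLICKS, MIN_CLICKS):
        print(f"{clicks:>5} clicks, blocked: {url}")
```

The cart page is blocked too, but it falls below the threshold, so only the product page is reported as a benchmark miss.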

Why Blocked by Robots.txt Matters to Clients

In SEO, content is critical, whether it's a blog post or a product details page. Content that is accidentally blocked by a robots.txt file wastes the effort put into creating it and can hurt traffic, engagement, and bottom-line revenue.

An optimal setup ensures that valuable content is indexed and ranks well, leading to increased organic traffic and potential conversions. Accidental blocking of important web pages often leads to missed opportunities, as these pages remain invisible to potential customers searching online.

Why Blocked by Robots.txt Matters to Agencies

For agencies, "Blocked by Robots.txt" is a critical metric reflecting their effectiveness in managing a client's SEO strategy. Proper management of this metric demonstrates an agency's capability to enhance a website's search engine performance.

It is a testament to their technical SEO skills, showing their proficiency in optimizing website visibility and ensuring that the client's key content is indexed correctly.

What To Do When a Page Is Indexed Though Blocked by Robots.txt

When a page is indexed even though it is blocked by the robots.txt file, it may take some detective work to find and resolve the cause. This situation often arises when external links point to the blocked page. Robots.txt stops Google from crawling a page, not from indexing its URL, so if Google finds links to a blocked page it may index the URL without crawling it, usually showing it in results without a description.

Another possible cause is a delay in search engines updating their crawled data and their list of indexed URLs, resulting in a temporary mismatch.

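To correct this issue, first check the robots.txt file for correct syntax and make sure the blocked URLs are specified accurately. If the page should stay out of search results, add a noindex directive, either a <meta name="robots" content="noindex"> tag in the page's HTML <head> or an X-Robots-Tag: noindex HTTP header, which is a more direct instruction to search engines not to index that page. Crucially, remove the robots.txt block for that page as well: search engines can only see the noindex directive if they are allowed to crawl the page.

If the page needs to disappear quickly, use the Removals tool in Google Search Console. This temporarily hides the URL from Google Search results for about six months, but it does not remove the page permanently, so a noindex directive or removing the page itself is still needed to keep it out of the index.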
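A common reason a noindex fix "doesn't work" is that the page is still disallowed in robots.txt, so crawlers never read the tag. The Python sketch below checks both conditions for one page using only the standard library. It is a minimal sketch: the page HTML, robots.txt text, and URL are hypothetical; it inspects only `<meta name="robots">` tags (not the X-Robots-Tag header); and `urllib.robotparser` approximates rather than replicates Google's matching rules.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def noindex_will_be_seen(page_html, robots_txt, url, user_agent="Googlebot"):
    """Return (has_noindex, crawlable); noindex only takes effect if both are True."""
    finder = RobotsMetaFinder()
    finder.feed(page_html)
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return "noindex" in finder.directives, parser.can_fetch(user_agent, url)


# Hypothetical inputs for illustration.
PAGE = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
ROBOTS = "User-agent: *\nDisallow: /private/\n"

if __name__ == "__main__":
    has_noindex, crawlable = noindex_will_be_seen(PAGE, ROBOTS, "https://example.com/private/old-offer")
    if has_noindex and not crawlable:
        print("Conflict: remove the Disallow rule so crawlers can see the noindex tag.")
```

Here the tag is present but the `/private/` rule hides it from crawlers, which is exactly the conflict to resolve by unblocking the URL.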

Best Practices When Analyzing and Reporting on Blocked by Robots.txt

Analyzing and reporting on Blocked by Robots.txt effectively is essential for optimizing website performance in search engines. This process not only identifies technical issues but also provides insights for strategic SEO improvements.

Data Accuracy

Ensure accurate measurement of blocked URLs. Accurate data is fundamental for effective analysis and subsequent SEO strategy adjustments.

Timeframe Analysis

Examine changes over time. Monitoring this metric over different periods will reveal trends and the impact of SEO strategies.

Channel Comparison

Evaluate across different search engines. Search engines may interpret robots.txt differently (for example, Google ignores the crawl-delay directive, which Bing supports), making cross-channel analysis valuable.

Campaign Correlation

Correlate with campaign performance. Understanding how blocked pages affect overall campaign results guides more effective SEO strategies.

Trend Interpretation

Identify and investigate anomalies. Sudden changes in this metric may indicate potential issues or opportunities for SEO enhancement.

Contextual Analysis

Analyze in relation to overall site health. This metric should be considered as part of a broader SEO audit for comprehensive insights.
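The timeframe and trend practices above can be supported by comparing the set of blocked URLs from one audit with the next, for example two exports of the blocked-by-robots.txt list from Google Search Console. The Python sketch below is an assumption-laden illustration: `compare_snapshots` is a hypothetical helper, the paths are placeholders, and the 20% `alert_ratio` is an arbitrary threshold to tune per site.

```python
def compare_snapshots(previous: set[str], current: set[str], alert_ratio: float = 0.2) -> dict:
    """Compare blocked-URL sets from two successive audits.

    alert_ratio is a hypothetical threshold: the change is flagged when the
    blocked count moves by more than this fraction of the previous count.
    """
    newly_blocked = sorted(current - previous)
    unblocked = sorted(previous - current)
    baseline = max(len(previous), 1)  # avoid division by zero on a first audit
    change = (len(current) - len(previous)) / baseline
    return {
        "newly_blocked": newly_blocked,
        "unblocked": unblocked,
        "change": change,
        "alert": abs(change) > alert_ratio,
    }


if __name__ == "__main__":
    # Placeholder snapshots from two hypothetical monthly audits.
    last_month = {"/cart/", "/search/"}
    this_month = {"/cart/", "/search/", "/blog/a", "/blog/b"}
    report = compare_snapshots(last_month, this_month)
    if report["alert"]:
        print(f"Blocked URLs changed by {report['change']:.0%}; investigate:", report["newly_blocked"])
```

Listing the newly blocked URLs, not just the count, points the investigation straight at the anomaly.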

How To Improve Blocked by Robots.txt

Effectively managing the robots.txt file is key to optimizing a website's SEO. Here are three actionable tips to ensure this metric positively impacts a site's search engine performance.

Audit Regularly

Audit the robots.txt file regularly to ensure that only the appropriate pages are blocked from search engine crawling.

Analyze Impact

Regularly check the impact of blocked pages on overall site traffic and SEO performance to understand their influence.

Educate Stakeholders

Make sure all team members understand the importance of robots.txt settings and their impact on SEO.
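A lightweight way to audit regularly is to compare each new version of robots.txt with the last known-good copy and surface only the rule changes, so an accidental `Disallow: /` (for example, a staging file pushed to production) stands out immediately. The Python sketch below uses the standard library's `difflib`; `rule_changes` is a hypothetical helper and the file contents are placeholders.

```python
import difflib


def rule_changes(old_txt: str, new_txt: str) -> list[str]:
    """Return added (+) and removed (-) Allow/Disallow lines between two versions."""
    changes = []
    diff = difflib.unified_diff(old_txt.splitlines(), new_txt.splitlines(), lineterm="", n=0)
    for line in diff:
        if line.startswith(("+++", "---")):
            continue  # diff file headers, not rule changes
        if line.startswith(("+", "-")):
            directive = line[1:].split(":", 1)[0].strip().lower()
            if directive in ("allow", "disallow"):
                changes.append(line)
    return changes


if __name__ == "__main__":
    # Placeholder versions: the new file blocks the entire site.
    known_good = "User-agent: *\nDisallow: /cart/\n"
    deployed = "User-agent: *\nDisallow: /\n"
    for change in rule_changes(known_good, deployed):
        print(change)
```

Running a check like this after every deployment, and sharing its output with the team, also supports the stakeholder-education tip.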